Table of Contents

Using GPUs from GWDG's Compute Cluster

GPGPU - General Purpose Computing on Graphics Processor Units

GPGPU generalizes the ability of graphics processing units - to operate in parallel on a large number of pixels of a picture - to perform in parallel numerical operations on a large number of elements of a general array of data.

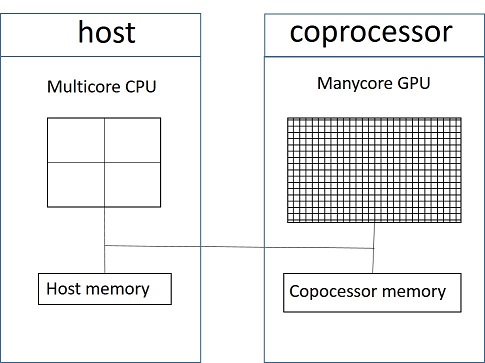

The GPU has a large number of simple processing units which work on data from the GPU's own main memory. The GPU is attached as a coprocessor via the PCI-bus to a multicore host processor, as shown in the following picture.

From the application running on the host suitable parts are split off and transfered for processing to the GPU. There are special programming environments for specifying the host and coprocessor parts of an application. In particular, NVIDIA provides the CUDA programming environment for GPGPU with their graphics coprocessors.

Compute systems for HPC consist of a large number of nodes connected by a high speed network, each node containing a number of multicore cpus and a number of attached GPUs.

The following notes will describe and explain the different ways to use single and mulitiple GPUs on GWDG's scientific compute cluster.

GPUs on GWDG's Scientific Compute Cluster

In July 2017, the following nodes in the cluster are equiped with NVIDIA GPUs:

- gwdo161-gwdo180, each with one GeForce GTX 770

- dge001-dge007, each with two GeForce GTX 1080

- dge008-dge014, each with four GeForce GTX 980

- dge015, with two GeForce GTX 980

- dte001-dte010, each with two Tesla K40m

The GeForce GPUs are ordinary graphics cards with focus on single precision operations, whereas the Tesla GPU has a larger main memory and a larger number of cores for double precision operations as needed for numerical intensive applications.

This is detailed in the following table with properties of the different NVIDIA models.

| Model | Architecture | Compute Capability | Clock Rate [MHz] | Memory [GB] | SP Cores | DP Cores |

|---|---|---|---|---|---|---|

| GeForce GTX 770 | Keppler | 3.0 | 1110 | 2 | 1536 | 0 |

| GeForce GTX 980 | Maxwell | 5.2 | 1126 | 4 | 2048 | 64 |

| GeForce GTX 1080 | Pascal | 6.1 | 1733 | 8 | 2560 | 80 |

| Tesla K40m | Keppler | 3.5 | 745 | 12 | 2280 | 960 |

A simple CUDA Example

In the following, a simple application - adding two vectors - will be given as an example showing the basic mechanism for offloding operations form host to graphics device within the CUDA programming environment.

CUDA is based on a standardized programming language and adds new language constructs to specify the operations to be executed on the GPU, to move data between the memories of host and GPU and to start and synchronize the operations on the GPU. There are CUDA environments for the C, C++ and Fortran languages. In the example the C language will be used.

CUDA programs are stored in files with the suffix .cu . A CUDA program consists of one main program to be executed on the host,

of functions to be executed on the host and of functions to be executed on the GPU device.

Adding two vectors on a GPU is realized by the following program file example_addvectors.cu

#include <stdio.h>

__global__ void add_d( int N, float *a, float *b, float *c ) {

int i = threadIdx.x + blockIdx.x*blockDim.x;

if (i < N) c[i] = a[i] + b[i];

}

int main(void) {

int N = 4, i;

float *a, *b, *c;

float *a_d, *b_d, *c_d;

a = (float *)malloc( sizeof(float)*N );

b = (float *)malloc( sizeof(float)*N );

c = (float *)malloc( sizeof(float)*N );

cudaMalloc( &a_d, sizeof(float)*N);

cudaMalloc( &b_d, sizeof(float)*N);

cudaMalloc( &c_d, sizeof(float)*N);

for (i=0; i<N; i++) {

a[i] = i;

b[i] = 2*i;

}

cudaMemcpy(a_d, a, sizeof(float)*N, cudaMemcpyHostToDevice);

cudaMemcpy(b_d, b, sizeof(float)*N, cudaMemcpyHostToDevice);

add_d<<<1,N>>>(N,a_d, b_d, c_d);

cudaMemcpy(c, c_d, sizeof(float)*N, cudaMemcpyDeviceToHost);

for(i=0; i<N; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

cudaFree(a_d); cudaFree(b_d); cudaFree(c_d);

}

In the main program, to be executed on the host, memory is allocated for two sets of three vectors:

- host memory for

a,b,cby calls to the standart C allocation functionmalloc - device memory for

a_d,b_d,c_dby calls to the CUDA specific allocation functioncudaMalloc

After initializing the two input vectors a,b in host memory, their content is copied from host memory to device memory by calling the

CUDA function cudaMemcpy.

The offloading of the add operation onto the device is effected by the call add_d<<<1,N>>>(N,a_d, b_d, c_d);

This call instructs the device to execute the function add_d on a configuratiion of device threads defined by the arguments witin the

triple bracket <<<...>>>. In this simple example, the device configuration is one threadblock with N threads.

The function add_d is declared with the CUDA attribute __global__ as a device function to be

executed on the device. Within a device function predefined thread local variables ( threadIdx.x,blockIdx.x,blockDim.x) can be accessed

which give every thread a unique identity allowing every thread to work on different data.

The result of the vector addition is stored on the device memory in c_d. After transfering the content of this vector to host memory with a

further call to a cudaMemcpy function, the result can be inspected on the host.

For a detailed explanation of CUDAs language constructs and the mechanism of mapping CUDA threads onto the cores in the given GPU consult the Proramming Guide in the CUDA Toolkit Documentation. An introduction to GPGPU with CUDA can be found in the presentations of the GWDG course “Parallel Computing with CUDA” at http://wwwuser.gwdg.de/~ohaan/CUDA/

Compiling and Executing CUDA Programs on GWDG's Compute Clusters

The frontend nodes of GWGD's cluster gwdu101, gwdu102, gwdu103 can be used for program development. The compiler nvcc

produces executables from CUDA program files. The environment for CUDA must be prepared by loading the corresponding module file with

the command

module load cuda80

Invoking the compile and link steps with

nvcc -arch=sm_30 example_addvectors.cu -o add.exe

produces the executable add.exe, which now can be started on one of the gpu nodes. With the option -arch=sm_30 code for

using GPUs with Compute Capability 3.0 and higher will be produced, so this executable will run on all GPU models in GWDG's cluster.

Jobs on GWDG's cluster are managed by IBM Spectrum LSF (Load Sharing Facility), formerly IBM Platform LSF. Jobs are submitted to various

queues by the bsub command. The name of the queue for jobs needing a GPU is gpu. Jobs in this queue will be started on

nodes with one or more GPUs.

The executable add.exe now can be submitted with the command

bsub -q gpu -n 1 -R “rusage[ngpus_shared=1]” -o out.%J ./add.exe

The option -n <x> sets the number of cores to be allocated for the job, for add.exe only one core is needed to execute

the host part of the CUDA program.

With the option -R “rusage[ngpus_shared=<x>]” the user declares the amount of gpu-activity of the job. Every node belonging to the

queue gpu has as many gpu shares as cores, irrespective of its number of gpus. LSF limits the total number of shares requested by the

ngpus_shared parameters of runnig jobs on a node to this maximal number.

A job utilizing permanently all gpus of a node

should be submitted with the maximal value for npgus_shared. This will grant this job the exclusive use of all the node's gpus.

Jobs using the gpu only for a small fraction of its total execution time should use a small value for npgus_shared,

thus allowing other jobs sharing the gpu ressources of this node. In particular 24 jobs can run simultaneously on a node with 24 cores,

if they all have been submitted with parameters -n 1 -R “rusage[ngpus_shared=1]”.

With the option -o out.%J the output from the submitted job will be written into a file out.<jobid> in the directory

from which the job was submitted, where <jobid> is the jobnumber LSF has given to this job. Without a -o option

the output of the job will be sent by email to the submitter's email address corresponding to his user id.

The options for the bsub command can be collected into a jobfile. The jobfile lsf.job for the command given above is

#!/bin/sh #BSUB -q gpu #BSUB -n 1 #BSUB -R "rusage[ngpus_shared=1]" #BSUB -o out.%J ./add.exe

which can be submitted with the command

bsub < lsf.job .

More options for the bsub command and the description for other LSF commands can be found at

https://info.gwdg.de/dokuwiki/doku.php?id=en:services:application_services:high_performance_computing:running_jobs

and in the man pages for the LSF commands.

Splitting the Program File

In order to demonstrate the different ways for using multiple gpus in a unified way, it is convenient to separate the program into two files:

a file main_add.c, containing the main program setting up the host environment for the vector addition, and a file add.cu

containing the code for executing the addition on the gpu device.

main_add.c :

#include<stdio.h>

#include<stdlib.h>

void add(int, int, float *, float *, float *);

int main(int argc,char **argv)

{

int dev_nbr = 0, N = 6, i;

float *a, *b, *c;

a = (float *)malloc( N*sizeof(float) );

b = (float *)malloc( N*sizeof(float) );

c = (float *)malloc( N*sizeof(float) );

// Initialize a and b

for (i=0; i<N; i++){

a[i]=i; b[i]=2*i;

}

//call function add from file add.cu

add(dev_nbr,N,a,b,c);

for(i=0; i<N; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

}

add.cu :

__global__ void add_d( int N, float *a, float *b, float *c ) {

int i = threadIdx.x + blockIdx.x*blockDim.x;

if (i < N) c[i] = a[i] + b[i];

}

extern "C" void add(int dev_nbr, int n, float *A, float *B, float *C)

{ float *a_d, *b_d, *c_d;

cudaSetDevice(dev_nbr);

cudaMalloc( &a_d, sizeof(float)*n);

cudaMalloc( &b_d, sizeof(float)*n);

cudaMalloc( &c_d, sizeof(float)*n);

cudaMemcpy(a_d, A, sizeof(float)*n, cudaMemcpyHostToDevice);

cudaMemcpy(b_d, B, sizeof(float)*n, cudaMemcpyHostToDevice);

add_d<<<1,n>>>(n,a_d, b_d, c_d);

cudaMemcpy(C, c_d, sizeof(float)*n, cudaMemcpyDeviceToHost);

cudaFree(a_d); cudaFree(b_d); cudaFree(c_d);

}

In addition to the CUDA commands in the example_addvectors.cu file, add.cu contains the call to cudaSetDevice(dev_nbr).

This call sets the number of the device to be used for the execution of the following CUDA code. In the main_add.c file the value

for dev_nbr has been set to 0. For using multiple devices, as explained in the next sections, the function add will be called

with different values for dev_nbr.

Furthermore the

add function declaration is prepended by the extern “C” qualifier. This instructs the nvcc compiler, which is a c++ compiler,

to make this function callable from the c program in man_add.c .

Each of the two files now has to be compiled by the appropriate compiler:

gcc -c main_add.c nvcc -c add.cu

For linking of the two generated object files with the gcc compiler the CUDA runtime library has to be included with the -lcudart flag::

gcc main_add.o add.o -lcudart -o add.exe

The submission of the executable add.exe to the cluster now can proceed as explained in the previous section.

Using Several GPUs of a Single Node - Multiple Executables

Each of the n gpus attached to a single node has a unique device number, running from zero to n-1. By default, a CUDA programm running

on the node will use device number 0. A specific device device_number can be selected with the CUDA function

cudaSetDevice(device_number). All device activities following this function call - memory accesses, kernel invocations - will be using

the device device_number .

The simplest way to use the n gpus of a single node in parallel is therefore to prepare n different executables, each with a call

to cudaSetDevice with a different value for the device_number and then running these executables simultaneously on the node.

Let e.g. exe0, exe1 be two executables, one including the function call cudaSetDevice(0),

the other the call cudaSetDevice(1)). Then by submitting the following jobscript 2 gpus of a node will be used in parallel:

#!/bin/sh #BSUB -q gpu #BSUB -W 1:00 #BSUB -o out.%J #BSUB -n 2 #BSUB -R "ngpus=2" #BSUB -R "rusage[ngpus_shared=24]" ./exe0 > out0 & ./exe1 > out1 &

This script requires 2 cores of a node belonging to the queue gpu. Furthermore, by the option -R “ngpus=2, the job

will be submitted to a node with 2 gpus. The request for 24 gpu shares guaranties the job exclusive use of this node (because in GWDG's

cluster all nodes with 2 gpus have 24 cores). The & after the commands for starting the executables causes the asyncronous execution of

these commands, such that the 2 executables will run simultaneously.

Using Several GPUs of a Single Node - Multiple Threads

In a multithreaded execution environment, every thread uses exclusively the device, which is set within the thread by the

cudaSetDevice command; if no device is set, the thread will use the device with the default device number 0. This mechanism

can be used to distribute work for simultaneous execution on several gpus of a single node. In the following example, the OpenMP

programming environment will be employed for the management of multiple threads.

Again the program part for setting up the host environment will be collected into a separate file, main_add_omp.c, which in this

case has to start multiple threads, each of which will call the function add from the file add.cu.

The number of num_gpus connected to a node can be enquired by a call to the CUDA function

cudaGetDeviceCount(&num_gpus) . This number will be returned in the main part by a call to the function devcount(), which is

included in the cu-file add.cu :

extern "C" int devcount(){

int num_gpus; cudaGetDeviceCount(&num_gpus);

return num_gpus;

}

The main program in main_add_omp.c sets the number of threads to be used

by the OpenMP function call

omp_set_num_threads(num_gpus) ,

and this number of threads is started by the OpenMP compiler directive

#pragma omp parallel default(shared) .

With the default(shared) clause every variable declared before this directive will be globally shared, i.e. every thread will

use the same address accessing these variables. On the other hand all variables declared within the parallel region

following the omp parallel directive will be threadlocal.

Within the parallel region the call to the OpenMP function int tid = omp_get_thread_num() will set

the local variable tid in each thread to a different number between 0 and num_gpus-1 . In the call to the fucntion add the

device nummer is set to the local value of tid, such that every thread will activate the add function on a different device.

The following code shows how every thread defines its own range of indices, for which the vector addition will be performed.

main_add_omp.c :

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

extern void add(int, int, float *, float *, float *);

extern int devcount();

int main(void) {

int N = 1000, i;

float *a, *b, *c;

a = (float *)malloc( sizeof(float)*N );

b = (float *)malloc( sizeof(float)*N );

c = (float *)malloc( sizeof(float)*N );

for (i=0; i<N; i++) {

a[i] = i;

b[i] = 2*i;

}

// num_gpus: number of gpus on the node

int num_gpus = devcount();

int nrct= N/num_gpus; int nrpl = N -nrct*num_gpus;

//activate num_gpus threads

omp_set_num_threads(num_gpus);

#pragma omp parallel default(shared)

{ int tid = omp_get_thread_num();

// n_loc: number of elements for this thread

int n_loc = nrct; if (tid < nrpl) n_loc = nrct+1;

// offs: offset in global vector

int offs = tid*(nrct+1); if (tid >= nrpl) offs = (tid*nrct+nrpl);

// gpu with device number tid adds elements with indices offs to offs+n_loc-1

printf("%i %i %i \n",tid,n_loc,offs);

add(tid,n_loc,&a[offs],&b[offs],&c[offs]);

}

for(i=0; i<3; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

for(i=N-3; i<N; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

}

The compilation and link commands for this program are

gcc -fopenmp -c main_add.c nvcc -c add.cu gcc -fopenmp main_add.o add.o -lcudart -o add.exe

A jobfile for submitting this executable to a node with 2 gpus and requesting exclusive use of the gpus is

#BSUB -q gpu #BSUB -W 0:05 #BSUB -n 2 #BSUB -o out.%J #BSUB -R "ngpus=2" #BSUB -R "rusage[ngpus_shared=24]" ./add.exe

Using GPUs on Different Nodes - Multiple MPI Tasks

GWDG's cluster has a number of nodes containing gpus, which are connected by an Infiniband high speed network. In order to use

simultaneously the gpus on different nodes, the message passing MPI framework for distributed computing will be used. The main program file

main_add_mpi.c below contains the code for the MPI tasks to be executed on different nodes, and on each node the

CUDA addition program in add.cu is invoked to perform the addition of the local vector elements on its gpu device with

device number 0.

main_add_mpi.c

#include "mpi.h"

#include<stdio.h>

#include<stdlib.h>

extern add(int, int, float *, float*, float *);

int main(int argc,char **argv)

{

int N = 1000, i, np, me, ip;

float *a, *b, *c, *a_n, *b_n, *c_n;

MPI_Init(&argc,&argv);

MPI_Comm_size(MPI_COMM_WORLD,&np);

MPI_Comm_rank(MPI_COMM_WORLD,&me);

// allocate and initialize global vectors

if (me == 0) {

a = (float *)malloc( sizeof(float)*N );

b = (float *)malloc( sizeof(float)*N );

c = (float *)malloc( sizeof(float)*N );

for (i=0; i<N; i++) {

a[i] = i;

b[i] = 2*i;

}

}

int nrct= N/np; int nrpl = N -nrct*np;

// n_loc: number of elements on this task

int n_loc = nrct; if (me < nrpl) n_loc = nrct+1;

// offs: offset in global vector

int offs = me*(nrct+1); if (me >= nrpl) offs = (me*nrct+nrpl);

// task me adds elements of global vector with indices offs to offs+n_loc-1

// allocate and initialize local vectors

a_n = (float *)malloc( n_loc*sizeof(float) );

b_n = (float *)malloc( n_loc*sizeof(float) );

c_n = (float *)malloc( n_loc*sizeof(float) );

MPI_Allgather(&offs,1,MPI_INT,displs,1,MPI_INT,MPI_COMM_WORLD);

MPI_Allgather(&n_loc,1,MPI_INT,counts,1,MPI_INT,MPI_COMM_WORLD);

MPI_Scatterv(a,counts,displs,MPI_FLOAT,a_n,counts[me],MPI_FLOAT,0,MPI_COMM_WORLD);

MPI_Scatterv(b,counts,displs,MPI_FLOAT,b_n,counts[me],MPI_FLOAT,0,MPI_COMM_WORLD);

int dev_nbr = 0;

add(dev_nbr,counts[me],a_n,b_n,c_n);

MPI_Gatherv(c_n,counts[me],MPI_FLOAT,c,counts,displs,MPI_FLOAT,0,MPI_COMM_WORLD);

if (me == 0) {

for (i=0; i<3; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

for(i=N-3; i<N; i++) {

printf( "%f + %f = %f\n", a[i], b[i], c[i] );

}

}

MPI_Finalize();

}

The main program starts by allocationg memory for the global vectors a,b,c and by initializing the

global input vectors a,b in task zero.

The first index and the number of indices to be handled by the different tasks are stored in the integer arrays displs and

counts. Then memory for local vectors a_n,b_n,c_n is allocated. The global input vectors a,b are distributed

to the corresponding local vectors a_n,b_n

of the different tasks using the MPI function MPI_Scatterv. Now every task calls the add function to perform the addition of its

vector pieces on its own gpu device. Finally the local results in c_n will be collected from the different tasks into the

global vector c in task 0 with theMPI function MPI_Gatherv.

For the compilation of the MPI code in main_add_mpi.c a compiler supporting an implemention of MPI has to used. On GWDG's cluster

Intel-MPI and OpenMPI are available. The OpenMPI implementation based on the gcc compiler will be used here. The corresponding module file

for OpenMPI must be loaded in addition to the cuda80 module file with the command

module load openmpi/gcc

. The compile and link steps are

mpicc -c main_add_mpi.c nvcc -c add.cu mpicc main_add_mpi.o add.o -lcudart -o add.exe

With the following jobfile this executable will be submitted to run on two nodes:

#BSUB -q gpu #BSUB -W 0:05 #BSUB -n 2 #BSUB -o out.%J #BSUB -m "dte[001-010]" #BSUB -R "span[ptile=1]" #BSUB -R "rusage[ngpus_shared=24]" #BSUB -a openmpi mpirun.lsf ./add.exe

The option #BSUB -m “dte[001-010]” specifies the range of nodes for the execution of the program, with #BSUB -R “span[ptile=1]”

only one task is running on a node such that the two tasks for this job will run on different nodes and #BSUB “rusage[ngpus_shared=24]”” will give

exclusive use of the nodes' gpu for this job.

Using GPUs on Different Nodes - More than One GPU per Node

The previous section describes the use of multiple gpus from different nodes, with the restriction that on every node only one gpu is used.

But most of the nodes in GWDG's cluster equipped with gpus have two or more devices. The MPI parallelization can easily be generalized to

allow several devices on each node to be used. With the MPI function MPI_Get_processor_name every task can determine the node

it is running on. The function dev_nbr_node collects the node names for all tasks into a list and determines for each task number

a device number, such that tasks running on the same node get different device numbers:

int dev_nbr_node(int tid) {

int me, np, ip;

MPI_Comm_rank( MPI_COMM_WORLD, &me );

MPI_Comm_size( MPI_COMM_WORLD, &np );

int namelen = 60, len;

char names[np][namelen],name[namelen];

MPI_Get_processor_name( name, &len );

MPI_Allgather( name, namelen, MPI_CHAR, names, namelen, MPI_CHAR, MPI_COMM_WORLD );

int ct = -1, dev_nbr;

for (ip=0; ip<np; ip++) {

if (strcmp(names[me],names[ip])==0){

ct = ct + 1;

if (tid == ip) dev_nbr = ct;

}

}

return dev_nbr;

}

The compile and link steps for this generalization of the combined MPI-CUDA program are the same as in the previous section. An example for a jobfile for the submission of a job using a total of 4 gpus on two nodes is the following:

#BSUB -q gpu #BSUB -W 0:05 #BSUB -n 4 #BSUB -o out.%J #BSUB -m "dte[001-010]" #BSUB -R "span[ptile=2]" #BSUB -R "rusage[ngpus_shared=24]" #BSUB -a openmpi mpirun.lsf ./a.out

The use of multiple gpus in multiple nodes can also be achieved with an hybrid approach by starting one MPI task on every node and by spawning in every task as many OMP threads as gpus to be used on the node.

A Real World Application: Diffusion

In the vector addition example every gpu just had to add its share of elements of the two vectors, no information had to be exchanged between different gpus. In the following the simulation of diffusion on a two-dimensional grid will be discussed as an application, in which data exchange between different gpus is required. Diffusion describes the change of some property , e.g. temperatur, over time in a given domain of space, starting from a given initial distribution of this property and given fixed values for the property on the boundary of the domain.

Diffusion will be simulated by calculating a new value of the property to be diffused at a grid point by combining the old values on this point and on the four neighbouring points, and iterating this update procedure a number of times.

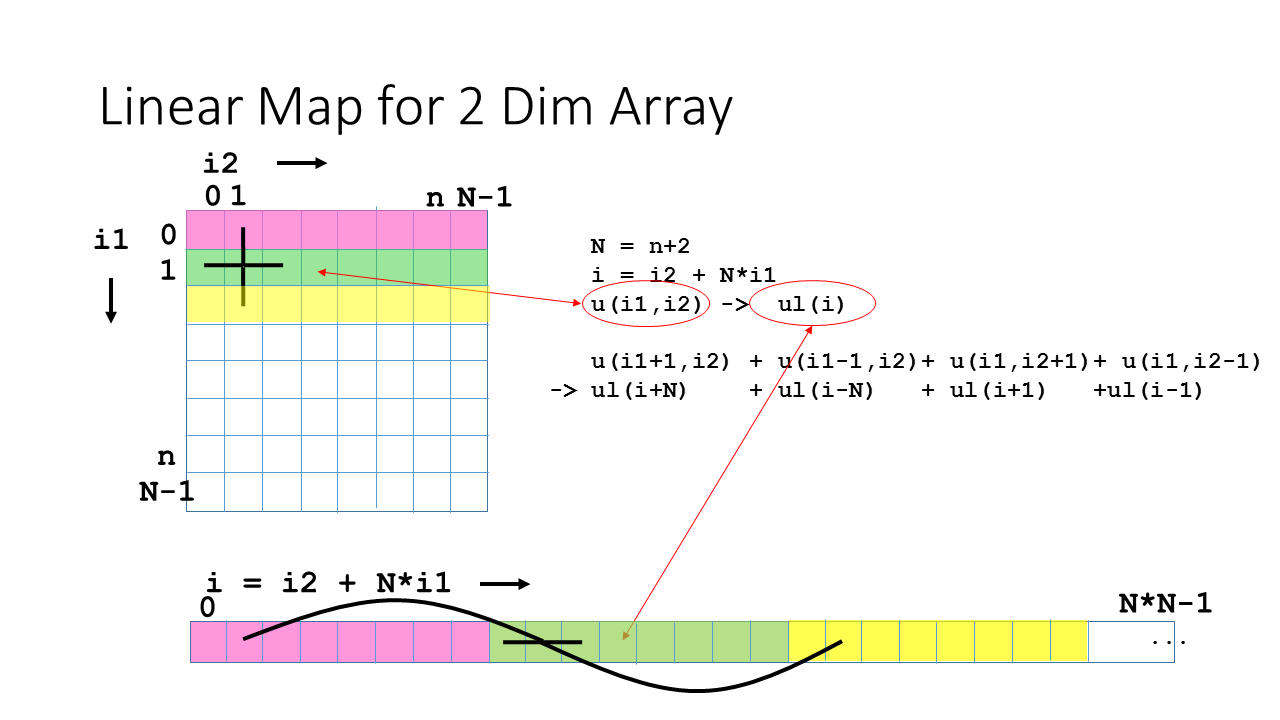

The following picture shows in its upper part the two dimensional grid of size N x N of values u(i1,i2), where each index runs from 0 to N-1. Only the values of the inner grid points with i1,i2 = 1,..,n=N-2 will be updated, the boundary values with i1 = 0 or N-1 and i2 = 0 or N-1 will stay fixed at their values given by the boundary condition of the problem. In the memory the two dimensinal array u(i1,i2) will be stored as a one dimensional contiguous sequence of values u(i), i = 0,…,N*N-1. The lower part of the picture visualizes the mapping u(i1,i2) → u(i) with the choice i = i2+N*i1, correponding do string together the rows of the two dimensional array. Also shown in the picture is the neighbourhood for updating the value on a particular point, both in the two dimensional and the linear setting.

The c-function update calculates the update for the general situation of a rectangular grid of size (n1+2)*n2+).

It reads the old values of the property from arrray u and stores the updated values in array v. Notice that all (n1+2)*(n2+2) elements

of array a with the old values are needed in order to calculate the inner n1*n2 elements of array v with the updated values.

void update (int n1, int n2, float *u, float *v)

{

int i1, i2, i;

float r = 0.2f, s = 1.f - 4.f*r;

for ( i2 = 1; i2 <= n2 ; i2++ ) {

for ( i1 = 1; i1 <= n1 ; i1++ ) {

i = i2 +(n2+2)*i1;

v[i] = s* u[i]

+ r * ( u[i-1] + u[i+1] + u[i-n2-2] +u[i+n2+2]);

}

}

}

The corresponding cuda-function update_d to be executed on a gpu is

__global__ void update_d( int n1, int n2, const float * __restrict__ u,

float* __restrict__ v) {

int i2 = 1+threadIdx.x + blockIdx.x*blockDim.x;

int i1 = 1+threadIdx.y + blockIdx.y*blockDim.y;

int i = i2 + (n2+2)*i1;

float r = 0.2f, s = 1 - 4*r;

if( i1 <= n1 && i2 <= n2 ) v[i] = s* u[i]

+ r * ( u[i-1] + u[i+1] + u[i-n2-2] +u[i+n2+2]);

}

As in the vector addition example the complete code for the simulation of diffusion will be split into two parts: a file main_diff.c containing the main program and all functions with code to be executed on the host, and a file diff_single_gpu.cu containing all functions containing code to be executed on a gpu.

Main program:

int main (int argc, char **argv)

{

int n, nt, n_thrds;

/* ------------ input of run parameters ---------------- */

scanf("%d %d ", &nt, &n);

printf("\nEingegebene Werte: \n nt = %d,\t n = %d\n", nt, n);

/* ------------ allocate global array ------- */

int size_gl = (n+2)*(n+2)*sizeof(float); float *u; u = malloc( size_gl );

/* ------------ initialize initial and boundary values ------- */

initialize (n, u);

/* --------- itertate updates -------------- */

diffusion(n,nt,u);

/* ------------ output of results ------------------------------ */

printf("\n\n mean value for field: %16.14f \n", mean_value(n,u));

The main program invokes the function diffusion, whose definition, containing cuda code, is stored in diff_sinle_gpu.cu:

extern "C" void diffusion(int n, int nt, float *u_h)

{

int il, size = (n+2)*(n+2)*sizeof(float);

float *u; cudaMalloc((void **) &u, size);

float *v; cudaMalloc((void **) &v, size);

cudaMemcpy(u, u_h, size, cudaMemcpyHostToDevice);

cudaMemcpy(v, u_h, size, cudaMemcpyHostToDevice);

int blksz_x = 32, blksz_y = 32;

int grdsz_x = (n+2+blksz_x-1)/blksz_x, grdsz_y = (n+2+blksz_y-1)/blksz_y;

dim3 blks(blksz_x,blksz_y); dim3 grds(grdsz_x,grdsz_y);

for ( il = 1; il<=nt; il++) {

update_d<<<grds,blks>>>(n,n,u,v);

update_d<<<grds,blks>>>(n,n,v,u);

}

cudaMemcpy(u_h, u, size, cudaMemcpyDeviceToHost);

cudaFree(u); cudaFree(v);

}

As in the vector addition case, the function diffusionto be invoked from the c program must have the qualifier extern “C”.

The execution configuration <<<grds,blks>>> is a two dimensional set of (n+2) x (n+2) threads. This allows the

simple specification of the indices of the inner points of the property field in the device function update_d.

Since a single block of threads in cuda can have at most 1024 threads, this two dimensional set is partitioned into

a two dimensional grid of two dimensional blocks of threads.

The complete files main_diff.c and diff_single_gpu can be found

here.

Parallel Diffusion with Multiple GPUs

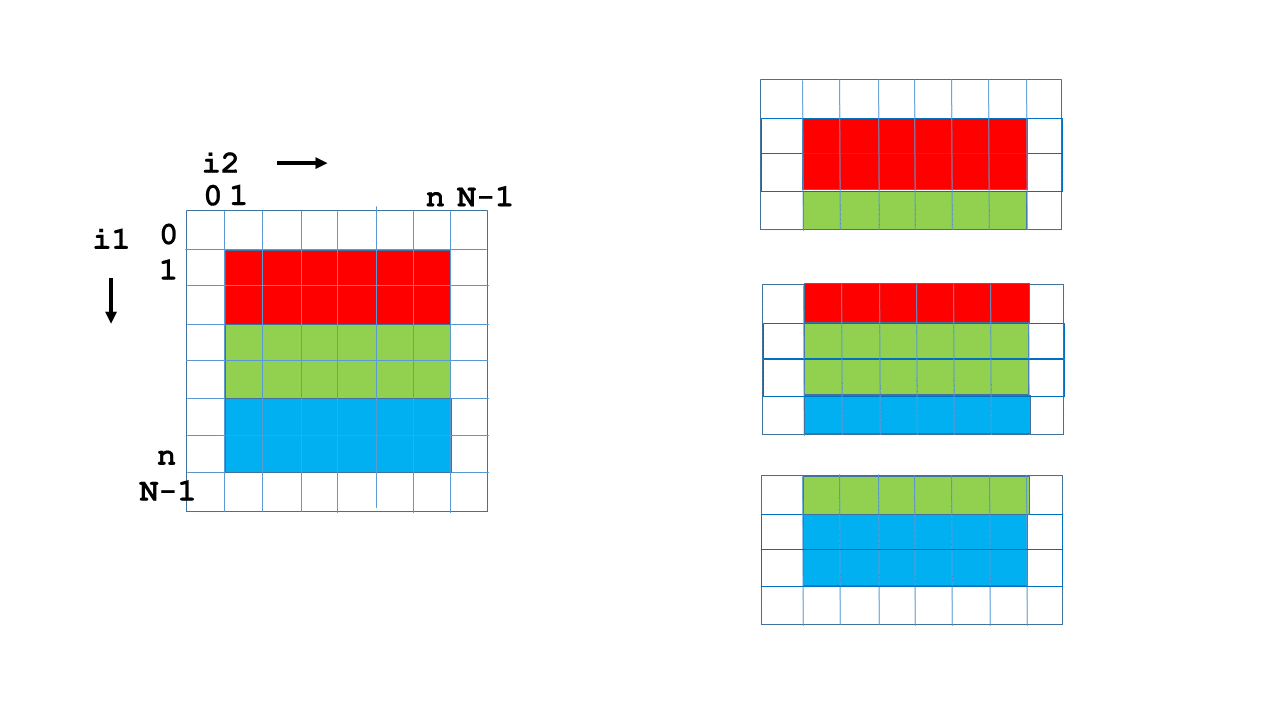

The obvious way to simulate diffusion on n_gpu devices is to distribute the n*n values of the property field among the available

devices, e.g. in a row-wise manner as shown in the following picture.

In order to calculate the updated values for its own rows, each device not only needs the old values in these rows but also the old values of the boundary rows, which belong to the neighbouring devices. Therefore after each update step in the diffusion function the devices have to exchange their first and last rows with the neighbouring devices. Because each device memory can communicate only with the host memory, the data exchange between devices proceeds in three steps:

- each device copies the first and last row of newly calculated values to separate places in host memory

- in host memory, the rows coming from neighbouring devices are exchanged in an appropriate way

- each device copies from host memory the needed boundary rows

The main program and other functions with c code will be collected in main_diff_omp.c.

int main (int argc, char **argv)

{

int n, nt, n_gpus;

float *u;

/* ------------ input of run parameters ---------------- */

scanf("%d %d %d", &n_gpus, &nt, &n);

printf("\nEingegebene Werte: \n n_thrds = %d, nt = %d,\t n = %d\n", n_gpus, n

t, n);

/* ------------ allocate global array ------- */

int size_gl = (n+2)*(n+2)*sizeof(float); u = malloc( size_gl );

/* ------------ initialize initial and boundary values ------- */

initialize (n, u);

/* ------------ allocate boundary arrays------- */

int size_h = (n+2)*n_gpus*sizeof(float);

cpf = malloc( size_h ); cpl = malloc( size_h );

/* --------- set up partioning according to the number of gpus-- */

int nrct= n/n_gpus; int nrpl = n -nrct*n_gpus;

omp_set_num_threads(n_gpus);

#pragma omp parallel default(shared)

{ int tid = omp_get_thread_num();

double tl =get_el_time();

// n_rows: number of rows to be updated by this thread

int n_rows = nrct; if (tid < nrpl) n_rows = nrct+1;

// offs: offset of local array

int offs = tid*(nrct+1); if (tid >= nrpl) offs = (tid*nrct+nrpl);

offs = offs*(n+2);

/* --------- itertate -------------- */

diffusion(tid,n_gpus,n_rows,n,nt,&u[offs]);

}

/* ------------ output of results ------------------------------ */

printf("\n\n mean value for field: %16.14f \n", mean_value(n,u));

}

In addition to the case of a single gpu, the main-function here allocates copy arrays cpf and cpl,

each of size n_gpus*n*sizeof(float), which will store the first rsp. last row of each gpu's array partition.

Then n_gpus threads are generated and the number of rows to be updated by each gpu and the offset of each gpu's array partition in

the global array is determined, before each thread calls the function diffusion, which now has the thread id and the total number of

threads as additional parameters.

main_diff_omp contains also the thread local function to exchange the copies of first and last rows coming from neighbouring gpus,

which will be invoked from the diffusion function in diff_mult_gpu.cu :

void *cpf, cpl;

void exchange(int tid, int n_gpus, int n, float *linef, float *linel)

{

int sil = n*sizeof(float);

if (n_gpus>1)

{

memcpy(&cpf[tid*n],linef,sil); memcpy(&cpl[tid*n],linel,sil);

#pragma omp barrier

if (tid ==0)

{ memcpy(linel,&cpf[(tid+1)*n],sil); }

else if (tid==n_gpus-1)

{ memcpy(linef,&cpl[(tid-1)*n],sil); }

else

{ memcpy(linel,&cpf[(tid+1)*n],sil);

memcpy(linef,&cpl[(tid-1)*n],sil); }

#pragma omp barrier

}

}

Of course, the gpu working on the first partition of the array only exchanges a row with its lower neighbour and the gpu working

on the last partition only exchanges a row with its upper neighbour. The omp barrier pragmas are important, they ensure that all gpus

have finished the updates of their partition, such that the new value of first and last rows are available for exchange.

The diffusion function in diff_mult_gpu.cu is the following:

extern "C" void diffusion(int tid, int n_gpus, int n1, int n2, int nt, float *u_h)

{

int il, size = (n1+2)*(n2+2)*sizeof(float), sil = n2*sizeof(float);

float *linef; linef= (float *)malloc(sil); float *linel; linel = (float *)malloc(sil);

cudaSetDevice(tid);

float *u; cudaMalloc((void **) &u, size);

float *v; cudaMalloc((void **) &v, size);

cudaMemcpy(u, u_h, size, cudaMemcpyHostToDevice);

cudaMemcpy(v, u, size, cudaMemcpyDeviceToDevice);

int blksz_x = 1024, blksz_y = 1;

int grdsz_x = (n2+2+blksz_x-1)/blksz_x, grdsz_y = (n1+2+blksz_y-1)/blksz_y;

dim3 blks(blksz_x,blksz_y); dim3 grds(grdsz_x,grdsz_y);

for ( il = 1; il<=nt; il++) {

update_d<<<grds,blks>>>(n1,n2,u,v);

border(tid, n_ngpus, n1, n2, v , linef, linel);

update_d<<<grds,blks>>>(n1,n2,v,u);

border(tid, n_gpus, n1, n2, u , linef, linel);

}

cudaMemcpy(u_h, u, size, cudaMemcpyDeviceToHost);

cudaFree(u); cudaFree(v);

}

The only difference to the analogous function for the single gpu case are the additional parameters tid and n_gpus which are

needed to differentiate the work on the different gpus and the invoking of the border function after every update. This function

is responsible for the exchange of boundary rows between the gpus and has the following form:

extern "C" void border(int tid, int n_gpus, int n1, int n2, float *v , float *linef, float *linel){

int sil = n2*sizeof(float);

cudaMemcpy(linef,&v[1+n2+2],sil,cudaMemcpyDeviceToHost);

cudaMemcpy(linel,&v[1+n1*(n2+2)],sil,cudaMemcpyDeviceToHost);

exchange(tid,n_gpus,n2,linef,linel);

if (n_gpus>1)

{

if (tid ==0)

{ cudaMemcpy(&v[1+(n1+1)*(n2+2)],linel,sil,cudaMemcpyHostToDevice); }

else if (tid==n_gpus-1)

{ cudaMemcpy(&v[1],linef,sil,cudaMemcpyHostToDevice); }

else

{ cudaMemcpy(&v[1+(n1+1)*(n2+2)],linel,sil,cudaMemcpyHostToDevice);

cudaMemcpy(&v[1],linef,sil,cudaMemcpyHostToDevice); }

}

}

The full code for the multi-gpu simulation of diffusion in the files main_diff_omp.c and diff_multi_gpu can be found

here.